Halp & In Praise of Doing Non-utilitarian Things

I firmly believe that engineering and computer science have the potential to do tremendous good. However, doing everything in service of some utilitarian pursuit can get draining, at least for me. That’s why I occasionally like to hack on something super silly, not particularly useful, and, most importantly, fun, especially when I’m trying to learn something new.

For example, back in undergrad, as I was learning about the Linux Kernel and the Go programming language,

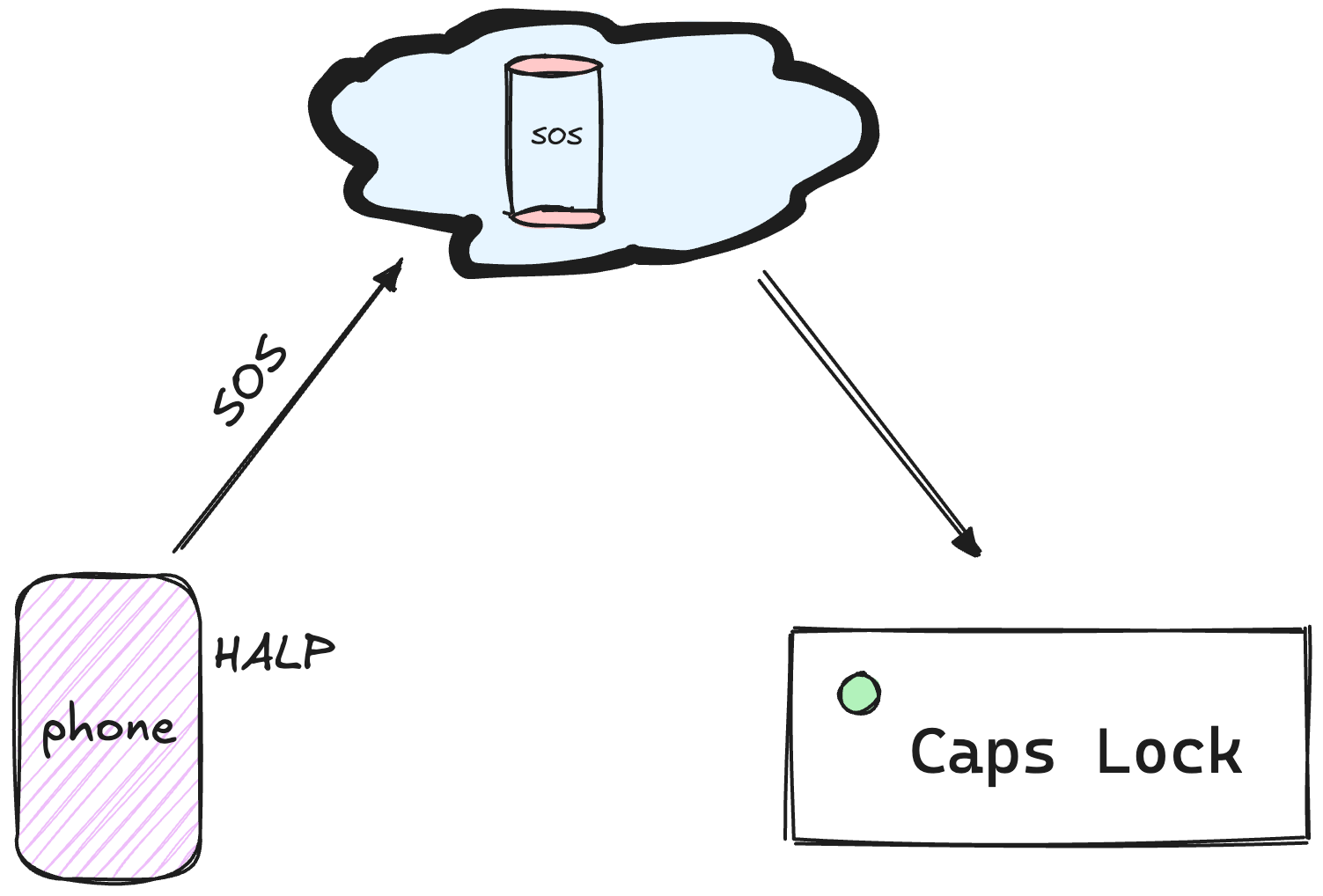

I built halp, a CLI tool that can play messages in Morse code

on your Capslock LED key! It does this by leveraging the fact that “everything” in Linux is a file. The state

of your Capslock LED resides in a file of the /sys subsystem (under /sys/class/leds), and you can toggle

this LED by writing to this file. I still get equally overjoyed and excited as I did 4 years ago when I think of this.

Fortunately, we had a small community of people who were also excited by goofy projects like this, and they contributed functionality to play Morse code messages using a keyboard’s backlight and the screen’s brightness. Needless to say, I had a tremendous amount of fun with this. But more importantly, I remember how building it got me really excited about Go and systems in general, and I think that’s mostly because building something fun and not particularly useful takes away the pressure to get started and immediately build the “greatest thing to ever exist”.

5th Graders & Distributed Systems

Earlier this year, I got the chance to volunteer with the Girls Who Code chapter at UIUC and was asked to talk to 5th graders about what really excites me about computer science. It turned out to be the perfect excuse to repurpose halp. With the help of Cloudflare, I built a small application around halp where you could type a message on your phone, and halp would take that message and do its thing with a laptop’s Caps Lock LED.

My goal was to get these students excited about our field and, along the way, maybe teach them a little about distributed systems. It turns out that building the most over-the-top, fun thing I could think of was a great way to do that. (Un-)surprisingly, they asked the most insightful questions, rooted in concepts like synchronization across nodes, capacity planning, and database throughput. If you get a chance to volunteer at your local chapter of Girls Who Code, I would highly recommend it!

Making Claude Halp

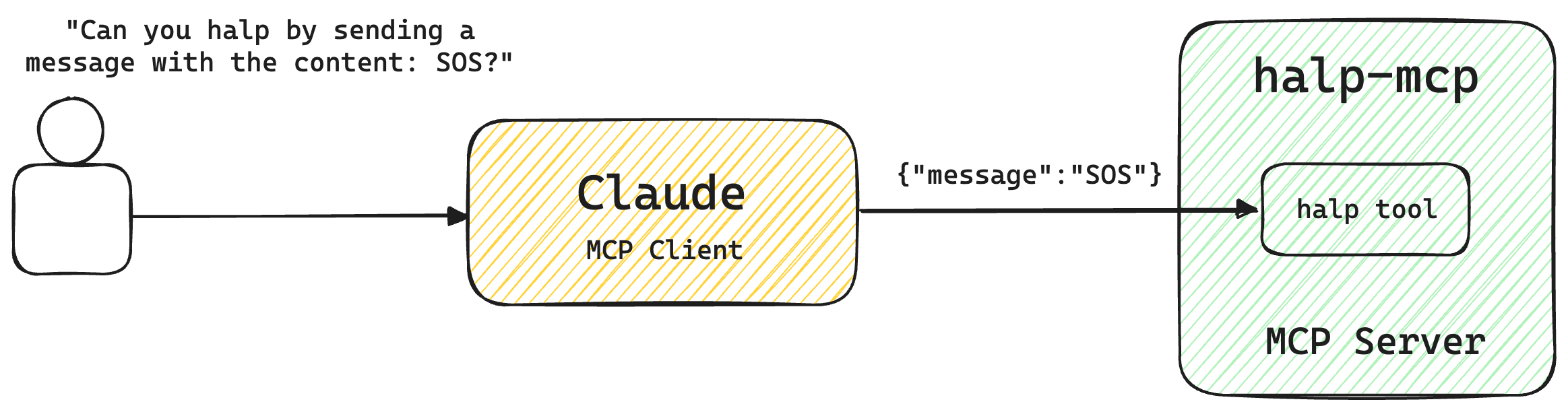

It was around the time I did the presentation with halp that MCP

was starting to get wildly popular and I was intrigued by the idea of it. I got super excited at the prospect of

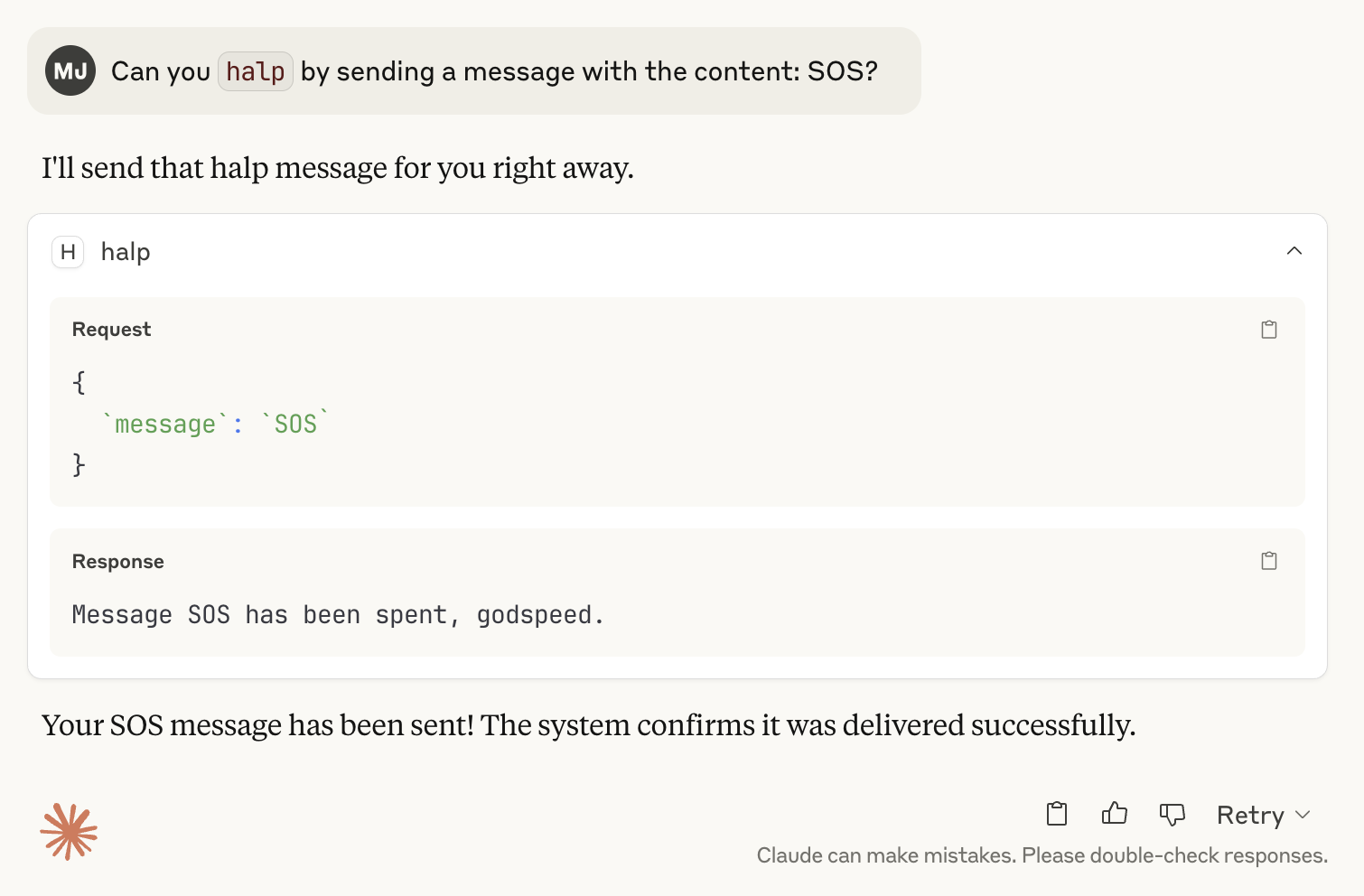

asking Claude: “Can you you halp by sending a message with the content: SOS?”

Needless to say, this should not be taken seriously. If you do need to send anything akin to an SOS, I hope you have the appropriate tools and means of doing so.

Although we’re just goofing around here, writing an MCP server for halp would let a model physically externalize a user’s request, which I think is quite powerful. You could extend the same principle to other applications, such as asking the model to control your thermostat, dim your lights, or water your plants. Besides, it also seemed like the perfect excuse to try out the official MCP Go SDK.

We can re-purpose much of the hello example from the go-mcp repository. Following the example, we can create a tool for halp that looks something like this:

```go
// Structured arguments for our tool.
type HalpArgs struct {
	// Message holds what our tool acts on.
	Message string `json:"message"`
}

// ExecuteHalp is an mcp.ToolHandlerFor: it handles tool calls for HalpArgs.
func ExecuteHalp(
	ctx context.Context,
	ss *mcp.ServerSession,
	params *mcp.CallToolParamsFor[HalpArgs],
) (*mcp.CallToolResultFor[struct{}], error) {
	msg := params.Arguments.Message
	// Do something with msg.
	return &mcp.CallToolResultFor[struct{}]{
		Content: []mcp.Content{
			&mcp.TextContent{
				Text: fmt.Sprintf("Message %s has been sent, godspeed.", msg),
			},
		},
	}, nil
}
```

That’s pretty cool: tool call handlers are typed on the struct we expect to pass back and forth with the model, which automatically gives us type safety for the messages we work with.
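The tool-call arguments arrive from the model as JSON, and the json:"message" struct tag is what ties that JSON field to HalpArgs.Message. The sketch below reproduces only this decoding step with encoding/json; it illustrates the mapping and is not the SDK’s internal code.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// HalpArgs mirrors the tool's argument struct.
type HalpArgs struct {
	Message string `json:"message"`
}

// decodeArgs turns a JSON arguments object into HalpArgs; the "message"
// key lands in HalpArgs.Message because of the struct tag.
func decodeArgs(raw []byte) (HalpArgs, error) {
	var args HalpArgs
	err := json.Unmarshal(raw, &args)
	return args, err
}

func main() {
	// Illustrative arguments, shaped like what a model might send.
	args, err := decodeArgs([]byte(`{"message": "SOS"}`))
	if err != nil {
		panic(err)
	}
	fmt.Println(args.Message) // SOS
}
```

With plain encoding/json, a payload of the wrong type, such as {"message": 42}, fails to decode rather than producing a half-filled struct.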

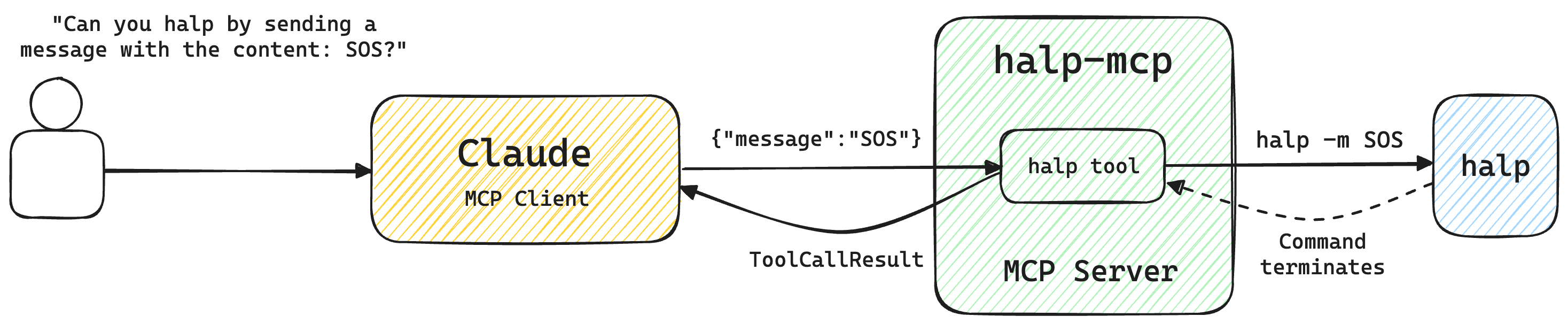

So far, we’ve gotten the message from Claude to the MCP server. Now we actually need to do something with the message.

Something to note here is that halp only runs on Linux (because it relies on the /sys subsystem), and you may need to use something like Un-Archived to run Claude Desktop on a Linux machine.

If you’re running a Linux machine, we can simply call into halp now that we have the message. Our handler (ExecuteHalp) takes the message, executes the halp command, waits for it to finish, and returns the result or any error:

```go
func ExecuteHalp(
	... // same parameters as before
) (*mcp.CallToolResultFor[struct{}], error) {
	msg := params.Arguments.Message

	// Call into halp and wait for it to finish.
	err := exec.Command("halp", "-m", msg).Run()
	if err != nil {
		return nil, fmt.Errorf("could not execute `halp` command: %w", err)
	}

	return &mcp.CallToolResultFor[struct{}]{
		Content: []mcp.Content{
			&mcp.TextContent{
				Text: fmt.Sprintf("Message %s has been sent, godspeed.", msg),
			},
		},
	}, nil
}
```

All that’s left is to create a server (using the stdio transport to keep things simple) and register our tool with it:

```go
func main() {
	server := mcp.NewServer("halp-mcp", "v0.0.1", nil)
	server.AddTools(mcp.NewServerTool("halp", "send halp message", ExecuteHalp, mcp.Input(
		mcp.Property("message", mcp.Description("the halp message to send")),
	)))

	t := mcp.NewLoggingTransport(mcp.NewStdioTransport(), os.Stderr)
	if err := server.Run(context.Background(), t); err != nil {
		log.Printf("Server failed: %v", err)
	}
}
```

Here’s the complete server implementation:

```go
package main

import (
	"context"
	"fmt"
	"log"
	"os"
	"os/exec"

	"github.com/modelcontextprotocol/go-sdk/mcp"
)

// HalpArgs holds the structured arguments for the halp tool.
type HalpArgs struct {
	Message string `json:"message"`
}

// ExecuteHalp runs halp on the message sent by the model and reports back.
func ExecuteHalp(
	ctx context.Context,
	ss *mcp.ServerSession,
	params *mcp.CallToolParamsFor[HalpArgs],
) (*mcp.CallToolResultFor[struct{}], error) {
	msg := params.Arguments.Message

	// Call into halp; CommandContext kills the process if the request is cancelled.
	if err := exec.CommandContext(ctx, "halp", "-m", msg).Run(); err != nil {
		return nil, fmt.Errorf("could not execute `halp`: %w", err)
	}

	return &mcp.CallToolResultFor[struct{}]{
		Content: []mcp.Content{
			&mcp.TextContent{
				Text: fmt.Sprintf("Message %s has been sent, godspeed.", msg),
			},
		},
	}, nil
}

func main() {
	server := mcp.NewServer("halp-mcp", "v0.0.1", nil)
	server.AddTools(mcp.NewServerTool("halp", "send halp message", ExecuteHalp, mcp.Input(
		mcp.Property("message", mcp.Description("the halp message to send")),
	)))

	// Log protocol traffic to stderr; stdout is reserved for the stdio transport.
	t := mcp.NewLoggingTransport(mcp.NewStdioTransport(), os.Stderr)
	if err := server.Run(context.Background(), t); err != nil {
		log.Printf("Server failed: %v", err)
	}
}
```

To tell Claude about halp-mcp, first build the halp-mcp binary:

```shell
cd /path/to/halp-mcp
# Or: go build -o halp-mcp .
go install .
```

Then edit the claude_desktop_config.json file:

```json
{
  "mcpServers": {
    "halp-mcp": {
      "command": "/path/to/halp-mcp/binary"
    }
  }
}
```

PS: I’m sure I could have saved myself some strife here, but it took me a non-trivial amount of time to find out that environment variables aren’t expanded in the config file: I had initially tried to use $(GOROOT)/bin in the halp-mcp binary path. (As it happens, go install puts binaries in $GOBIN, or $GOPATH/bin by default, rather than $GOROOT/bin.) So, if this is news to you, I hope you can avoid my mistakes.

PPS: If you need help debugging your MCP server logs, try using the Filesystem MCP server and asking Claude what’s going on in the corresponding log file after you take a look 😬.

That said, we should be good to go! Let’s restart Claude and see how we fare:

And finally, what does our user’s request look like, externalized onto a Caps Lock LED? (Although I don’t know how discernible the LED is in the GIF below, whoops.)

Can We Have More Fun With This?

What if I couldn’t run Claude Desktop on my Linux machine and had to use a Mac or Windows machine instead? Given that halp only runs on Linux, we now need a way for our message to get from one machine to another. The Girls Who Code demo, which used a website to ask for the message, did this by storing it in Cloudflare Workers KV. On my Linux machine, I then ran a Go program that simply polled the KV namespace for the message and, once it was retrieved, invoked halp on it.

We could do the same thing by making the tool handler in our MCP server use the Cloudflare API and create the key that

holds the message. But instead of using Cloudflare KV as storage, I decided to have some more fun with this and used

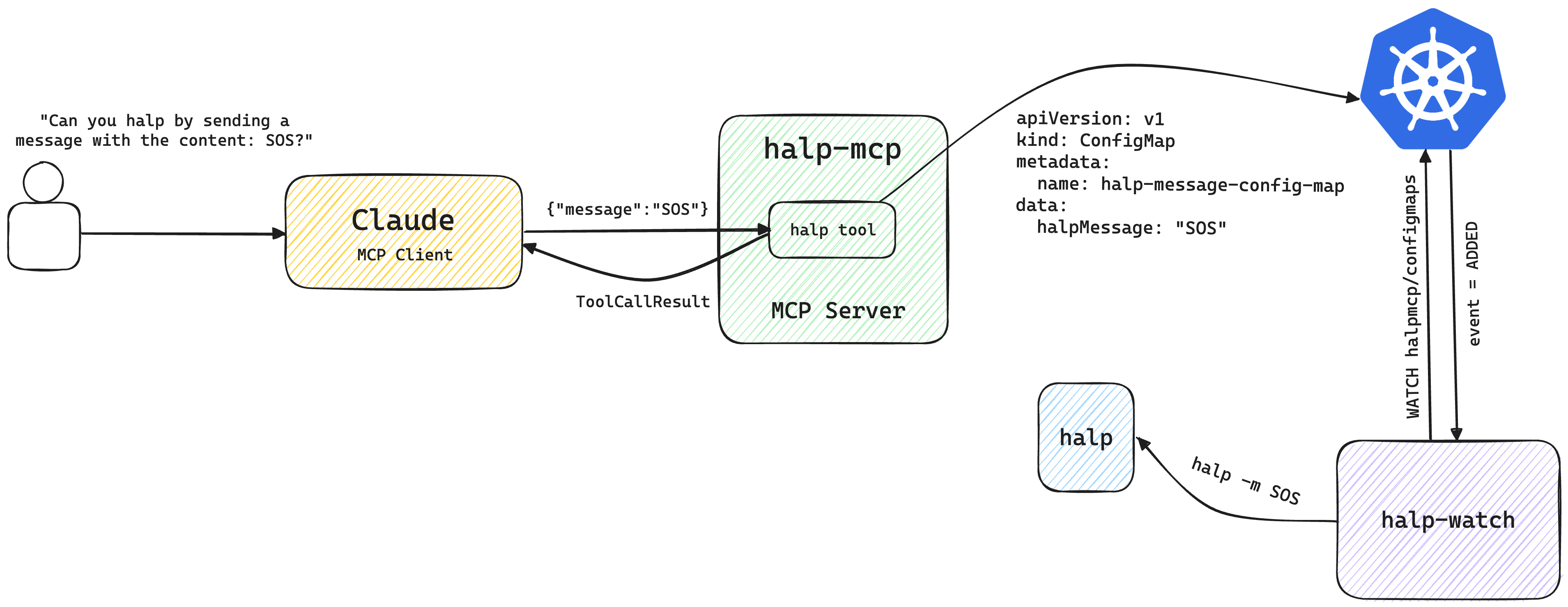

Kubernetes instead. The below implementation and method works regardless of where your Kubernetes

cluster is as long as your kubeconfig points to it.

We’ll store our message in a ConfigMap with a data key named halpMessage, whose value is the message our tool handler received. The ConfigMap for a message with the content SOS would then look like this:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: halp-message-config-map
data:
  halpMessage: "SOS"
```

We can create our ConfigMap using Kubernetes client-go in our tool handler:

```go
func ExecuteHalp(
	... // same parameters as before
) (*mcp.CallToolResultFor[struct{}], error) {
	msg := params.Arguments.Message

	// Create a ConfigMap holding the message.
	if _, err := createConfigMap(ctx, msg, mcpNamespace); err != nil {
		return nil, fmt.Errorf("could not create K8s ConfigMap: %w", err)
	}

	...
}

// Name of the ConfigMap that carries the message (as in the YAML above).
const configMapName = "halp-message-config-map"

func createConfigMap(ctx context.Context, message, ns string) (*corev1.ConfigMap, error) {
	// Construct the client.
	clientset, err := createKubernetesClient()
	if err != nil {
		return nil, err
	}

	// Create the ConfigMap with the message.
	configMap := &corev1.ConfigMap{
		ObjectMeta: metav1.ObjectMeta{
			Name:      configMapName,
			Namespace: ns,
		},
		Data: map[string]string{
			"halpMessage": message,
		},
	}

	result, err := clientset.CoreV1().ConfigMaps(ns).Create(ctx, configMap, metav1.CreateOptions{})
	if err != nil {
		return nil, err
	}

	// The log package writes to stderr, so this doesn't disturb the stdio transport.
	log.Printf("Created ConfigMap: %s in namespace: %s", configMapName, ns)
	return result, nil
}
```

Now that halp-mcp can create a ConfigMap, we need a way to retrieve that object and call halp. Recall that in the previous iteration, we periodically polled Cloudflare KV to check whether an object was present. Instead of polling repeatedly, we can leverage the WATCH API in Kubernetes. With a WATCH, we get a long-lived connection on which events related to the object type we’re watching are streamed. Given a continuous stream of events, we can react to each one as it arrives.

For our purposes, this means that when we WATCH ConfigMaps and get an ADDED event signifying the creation of a ConfigMap, we extract the message from it and call into halp. Note that ConfigMaps can be created in the cluster independently of our little experiment. To isolate the events that are relevant to us, we create ConfigMaps (in our tool handler) and WATCH for them in a dedicated Kubernetes namespace called halpmcp.

We can open a WATCH request using client-go as follows:

```go
const mcpNamespace = "halpmcp"

func handleEvent(event watch.Event) {
	switch event.Type {
	case watch.Added:
		cm, ok := event.Object.(*corev1.ConfigMap)
		if !ok {
			panic(fmt.Sprintf("received object not a ConfigMap: %#v", event.Object))
		}

		...

		// Skip ConfigMaps without our key, such as kube-root-ca.crt,
		// which Kubernetes creates in every namespace.
		msg, ok := cm.Data["halpMessage"]
		if !ok {
			return
		}
		log.Println("ConfigMap data:", msg)

		// Log (rather than exit) so one failed message doesn't stop the watcher.
		if err := exec.Command("halp", "-m", msg).Run(); err != nil {
			log.Printf("error trying to execute the halp command: %v", err)
		}
	}
}

func main() {
	clientset, err := createKubernetesClient()
	if err != nil {
		log.Fatalf("Failed to create Kubernetes client: %v", err)
	}

	...

	// Watch for ConfigMaps in the halpmcp namespace.
	w, err := clientset.CoreV1().ConfigMaps(mcpNamespace).Watch(ctx, metav1.ListOptions{
		Watch:           true,
		ResourceVersion: "",
	})
	if err != nil {
		log.Fatalf("error establishing watch: %v", err)
	}
	defer w.Stop()

	watchChan := w.ResultChan()
	for {
		select {
		// Handle watch events.
		case event, ok := <-watchChan:
			if !ok {
				// The server closed the watch; without this check, the closed
				// channel would yield empty events in a tight loop.
				log.Println("watch channel closed")
				return
			}
			handleEvent(event)
		case <-ctx.Done():
			return
		}
	}
}
```

End-to-end, here’s what our system finally looks like:

Conclusion

I think it’s fair to say that I’ve had quite a bit of fun with this post. Building something silly really is an excellent way to get started learning about something new. While this was mainly meant as a learning exercise, I’ve become increasingly interested in deploying and running these servers in scalable, fault-tolerant setups, especially when things like memory and shared state come into play. Maybe I’ll do a follow-up post here!

That said, the halp-mcp and WATCH implementation can be found here.

Please feel free to contact me if there are improvements or corrections I can make, or if you’d just like to chat!